AWS Certified Solution Architect & Developer & SysOps Administrator Associate

Links

IAM

Users & Groups

IAM: Identity and Access Management, A Global service

- Root account created by default, shouldn’t be used or shared

- Users are people within your organization, and can be grouped

- Groups only contain users, not other groups

- Users don’t have to belong to a group, and user can belong to up to 10 groups

- The user gains the permissions applied to the group through the policy

Note

- IAM Users and Roles are IAM Identities, while User Groups are not and cannot be authenticated or authorized

- User groups are used for collecting users with common needs and then applying IAM permissions policies to them

Policy

- Policies are documents that define permissions and are written in JSON

- Identity-based policies can be applied to users, groups, and roles

- All permissions are implicitly denied by default

- In AWS you apply the least privilege principle: don’t give more permissions than a user needs

Types of Policy

- Identity-based policies

- Attached to users, groups, or roles

- Control what actions an identity can perform, on which resources, and under what conditions

- Resource-based policies – attached to a resource; define permissions for a principal accessing the resource

- IAM permissions boundaries – an advanced feature in which you set the maximum permissions that an identity-based policy can grant to an IAM entity

- Access control lists (ACLs) – control which principals in other accounts can access the resource to which the ACL is attached

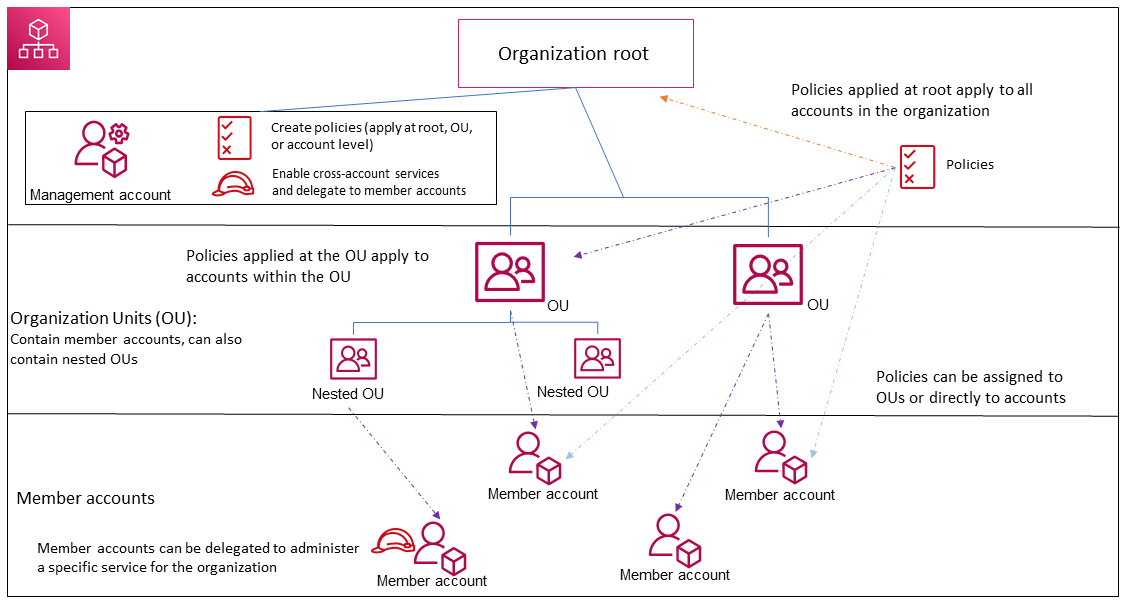

- AWS Organizations service control policies (SCP) – specify the maximum permissions for an organization or OU

- Session policies – used with

AssumeRoleAPI actions

Determination Rules

- By default, all requests are implicitly denied (though the root user has full access)

- An explicit allow in an identity-based or resource-based policy overrides this default

- If a permissions boundary, Organizations SCP, or session policy is present, it might override the allow with an implicit deny

- An explicit deny in any policy overrides any allows

IAM Policies Structure

Version: policy language version, always include "2012-10-17"Id: an identifier for the policy(optional)Statement: one or more individual statements(required)Sid: an identifier for the statement(optional)Effect: whether the statement allows or denies access (Allow, Deny)Principal: account | user | role to which this policy applied toAction: list of actions(List, Read, Permissions Management, Write, and Tagging)Resource: list of resources to which the actions applied toCondition: conditions for when this policy is in effect (optional)

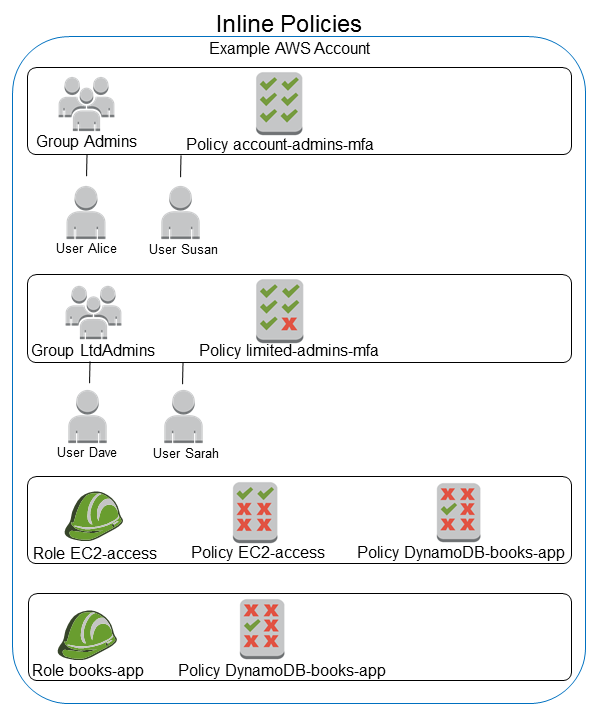

Inline vs Managed Policies

- AWS Managed Policy

- Maintained by AWS

- Good for power users and administrators

- Updated in case of new services / new APIs

- Customer Managed Policy

- Best Practice, re-usable, can be applied to many principals

- Version Controlled + rollback, central change management

- Inline

- Strict one-to-one relationship between policy and principal

- Policy is deleted if you delete the IAM principal

An inline policy is a policy that's embedded in an IAM identity (a user, group, or role)

IAM Security Tools

Credentials Report(account-level)- A report that lists all your account's users and the status of their various credentials

Access Advisor(user-level)- Access advisor shows the service permissions granted to a user and when those services were last accessed.

- You can use this information to revise your policies.

IAM Roles

Some AWS service will need to perform actions on your behalf -> To do so, we will assign permissions to AWS services with IAM Roles

- An IAM role is an IAM identity that has specific permissions

- Roles are assumed by users, applications, and services

- Once assumed, the identity "becomes" the role and gain the roles’ permissions

- Common roles:

- EC2 Instance Roles

- Lambda Function Roles

- Roles for CloudFormation

AWS STS – Security Token Service

- Allows to grant limited and temporary access to AWS resources (up to 1 hour).

- Credentials include:

- AccessKeyId

- Expiration

- SecretAccessKey

- SessionToken

- Trust policies control who can assume the role

- Temporary credentials are used with identity federation, delegation, cross-account access, and IAM roles

- APIs:

sts:AssumeRole: Assume roles within your account or cross accountsts:AssumeRoleWithSAML: return credentials for users logged with SAMLsts:AssumeRoleWithWebIdentity- return creds for users logged with an IdP (Facebook Login, Google Login, OIDC compatible…)

- AWS recommends against using this, and using Cognito Identity Pools instead

sts:GetSessionToken: for MFA, from a user or AWS account root usersts:GetFederationToken: obtain temporary creds for a federated usersts:GetCallerIdentity: return details about the IAM user or role used in the API callsts:DecodeAuthorizationMessage: decode error message when an AWS API is denied

IAM Guidelines & Best Practices

- Don’t use the root account except for AWS account setup

- One physical user = One AWS user

- Assign users to groups and assign permissions to groups

- Create a strong password policy

- Use and enforce the use of Multi Factor Authentication (MFA)

- Create and use Roles for giving permissions to AWS services

- Use Access Keys for Programmatic Access (CLI / SDK)

- Audit permissions of your account with the IAM Credentials Report

- Never share IAM users & Access Keys

- Never ever store IAM key credentials on any machine but a personal computer or on-premise server

- On premise server best practice is to call

AWS Security Token Serviceto obtain temporary security credentials

EC2

EC2: Elastic Compute Cloud

EC2 Instance: AMI (OS) + Instance Size (CPU + RAM) + Storage + security groups + EC2 User Data

Sizing & Configuration Options

- Operating System (OS): Linux, Windows or MacOS

- Compute power & Cores (CPU)

- Random-access Memory (RAM)

- Storage Space:

- Network-attached (EBS & EFS)

- Hardware (EC2 Instance Store)

- Network Card: speed of the card, Public IP address

- Firewall Rules: security group

- Bootstrap Script (configure at first launch): EC2 User Data

EC2 User Data

- It is possible to bootstrap instances using an EC2 User data script

- Bootstrapping means launching commands when a machine starts

- The script only run once at the instance first start

- EC2 user data is used to automate boot tasks such as:

- Installing updates

- Installing software

- Downloading common files from the internet

- Anything you can think of

- The EC2 User Data Script runs with the root user

- Limited to 16 KB

- Batch and PowerShell scripts can be run on Windows

EC2 Meta Data

- Instance metadata is data about your EC2 instance

- Instance metadata is available at

http://169.254.169.254/latest/meta-data

Instance Types

- General Purpose:

- Great for a diversity of workloads such as web servers or code repositories

- Balance between Compute, Memory and Networking

- Compute Optimized:

- Great for compute-intensive tasks that require high performance processors

- Use cases:

- Batch processing workloads

- Media transcoding

- High performance web servers

- High performance computing (HPC)

- Scientific modeling & machine learning

- Dedicated gaming servers

- Memory Optimized:

- Fast performance for workloads that process large data sets in memory

- Use cases:

- High performance, relational/non-relational databases

- Distributed web scale cache stores

- In-memory databases optimized for BI (business intelligence)

- Applications performing real-time processing of big unstructured data

- Storage Optimized:

- Great for storage-intensive tasks that require high, sequential read and write access to large data sets on local storage

- Use cases:

- High frequency online transaction processing (OLTP) systems

- Relational & NoSQL databases

- Cache for in-memory databases (for example, Redis)

- Data warehousing applications

- Distributed file systems

Note

Naming convention:

Exp. m5.2xlarge

- m: instance class

- 5: generation

- 2xlarge: size within the instance class

Tip

Stopping and starting the instance will also move it to different underlying hardware

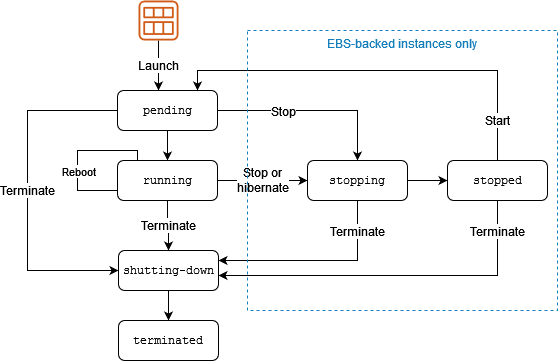

ECS Instance Lifecycle

Stopping EC2 instances

- EBS backed instances only

- No charge for stopped instances

- EBS volumes remain attached (chargeable)

- Data in RAM is lost

- Instance is migrated to a different host

- Private IPv4 addresses and IPv6 addresses retained; Public IPv4 addresses released

- Associated Elastic IPs retained

Hibernating EC2 instances

- We know we can stop, terminate instances

- Stop – the data on disk (EBS) is kept intact in the next start

- Terminate – any EBS volumes (root) also set-up to be destroyed is lost

- On start, the following happens:

- First start: the OS boots & the EC2 User Data script is run

- Following starts: the OS boots up

- Then your application starts, caches get warmed up, and that can take time!

- Introducing EC2 Hibernate:

- The in-memory (RAM) state is preserved

- The instance boot is much faster! (the OS is not stopped / restarted)

- Under the hood: the RAM state is written to a file in the root EBS volume

- The root EBS volume must be encrypted

- Applies to on-demand or reserved Linux instances

- Contents of RAM saved to EBS volume

- Must be enabled for hibernation when launched

- Use cases:

- Long-running processing

- Saving the RAM state

- Services that take time to initialize

- When started (after hibernation):

- The EBS root volume is restored to its previous state

- The RAM contents are reloaded

- The processes that were previously running on the instance are resumed

- Previously attached data volumes are reattached and the instance retains its instance ID

- When an EC2 instance is hibernated, the following are charged:

- EBS storage charges for in-memory data saved in EBS volumes

- Elastic IP address charges which are associated with an instance

- Good to know:

- Supported Instance Families – C3, C4, C5, I3, M3, M4, R3, R4, T2, T3, ...

- Instance RAM Size – must be less than 150 GB

- Instance Size – not supported for bare metal instances

- AMI – Amazon Linux 2, Linux AMI, Ubuntu, RHEL, CentOS & Windows...

- Root Volume – must be encrypted EBS, not instance store

- Available for On-Demand, Reserved and Spot Instances

- An instance can NOT be hibernated more than 60 days

Rebooting EC2 instances

- Equivalent to an OS reboot

- DNS name and all IPv4 and IPv6 addresses retained

- Does not affect billing

Retiring EC2 instances

- Instances may be retired if AWS detects irreparable failure of the underlying hardware that hosts the instance

- When an instance reaches its scheduled retirement date, it is stopped or terminated by AWS

Terminating EC2 instances

- Means deleting the EC2 instance

- Cannot recover a terminated instance

- By default root EBS volumes are deleted

Recovering EC2 instances

- CloudWatch can be used to monitor system status checks and recover instance if needed

- Applies if the instance becomes impaired due to underlying hardware / platform issues

- Recovered instance is identical to original instance

Security Groups

- Security groups only contain allow rules

- Security groups rules can reference by IP or by security group

- Security groups are acting as a "firewall" on EC2 instances

- They regulate:

- Type + Protocol

- Control of inbound network (from other to the instance)

- Control of outbound network (from the instance to other)

- Port Range

- Source: Authorised IP ranges – IPv4 and IPv6 / other security group

- Type + Protocol

- Can be attached to multiple instances

- Locked down to a

region/VPCcombination - Does live "outside" the EC2 – if traffic is blocked the EC2 instance won’t see it

- It’s good to maintain one separate security group for SSH access

- If your application is not accessible (time out), then it’s a security group issue

- If your application gives a "connection refused" error, then it’s an application error or it’s not launched

- All inbound traffic is blocked by default

- All outbound traffic is authorized by default

Instances Purchasing Options

On-Demand Instances

- Pay for what you use:

- Amazon Linux, Windows and Ubuntu: billing per second, after the first minute

- MacOS: billing per hour

- Commercial Linux distros such as Red Hat EL and SUSE ES use hourly pricing

- Highest cost but no upfront payment

- No long-term commitment

- Recommended for short-term and un-interrupted workloads, where you can't predict how the application will behave

Reserved Instance

- You reserve a specific instance attributes (Instance Type, Region,Tenancy, OS)

- Payment Options – No Upfront (+discount), Partial Upfront (++discount), All Upfront (+++discount)

- Reserved Instance’s Scope – Regional or Zonal (reserve capacity in an AZ)

- Recommended for steady-state usage applications (think database)

- You can buy and sell in the Reserved Instance Marketplace

- Standard RI - Change AZ, instance size (Linux), networking type - Use

ModifyReservedInstancesAPI - Convertible RI - Change AZ, instance size (Linux), networking type + Change family, OS, tenancy, payment option- Use

ExchangeReservedInstancesAPI

Savings Plans

- 1 or 3-year

- hourly commitment to usage of Fargate, Lambda, and EC2

- Any Region, family, size, tenancy, and OS

- 1 or 3-year

- hourly commitment to usage of EC2 within a selected Region and Instance Family

- Any size, tenancy and OS

Spot Instances

- The most cost-efficient instances in AWS

- 2-minute warning if AWS need to reclaim capacity – available via instance metadata and CloudWatch Events

- Not suitable for critical jobs or databases

- Useful for workloads that are resilient to failure

- Batch jobs

- Data analysis

- Image processing

- Any distributed workloads

- Workloads with a flexible start and end time

Spot Instance Types

- Spot Instance: One or more EC2 instances

- Spot Fleet: launches and maintains the number of Spot / On-Demand instances to meet specified target capacity

- EC2 Fleet: launches and maintains specified number of Spot / On-Demand / Reserved instances in a single API call

- Spot Block: Uninterrupted for 1-6 hours; Pricing is 30% - 45% less than On-Demand

Dedicated Hosts

- A physical server with EC2 instance capacity fully dedicated to your use

- Allows you address compliance requirements and use your existing server-bound software licenses (per-socket, per-core, per—VM software licenses)

- The most expensive option

- Purchasing Options:

- On-demand – pay per second for active Dedicated Host

- Reserved - 1 or 3 years (No Upfront | Partial Upfront | All Upfront)

- Useful for software that have complicated licensing model (BYOL – Bring Your Own License) Or for companies that have strong regulatory or compliance needs

Dedicated Instances

- Instances run on hardware that’s dedicated to you - no other customers will share your hardware

- May share hardware with other instances in same account

- No control over instance placement (can move hardware after Stop / Start)

Capacity Reservations

- Reserve On-Demand instances capacity in a specific AZ for any duration

- You always have access to EC2 capacity when you need it

- no time commitment (create/cancel anytime), no billing discounts

- Combine with Regional Reserved Instances and Savings Plans to benefit from billing discounts

- You’re charged at On-Demand rate whether you run instances or not

- Suitable for short-term, uninterrupted workloads that needs to be in a specific AZ

Spot Fleets

Spot Fleets = set of Spot Instances + (optional) On-Demand Instances

- The Spot Fleet will try to meet the target capacity with price constraints

- Define possible launch pools: instance type (Exp. m5.large), OS, Availability Zone

- Can have multiple launch pools, so that the fleet can choose

- Spot Fleet stops launching instances when reaching capacity or max cost

- Strategies to allocate Spot Instances:

lowestPrice: from the pool with the lowest price (cost optimization, short workload)diversified: distributed across all pools (great for availability, long workloads)capacityOptimized: pool with the optimal capacity for the number of instances

- Spot Fleets allow us to automatically request Spot Instances with the lowest price

AMI

AMI: Amazon Machine Image

- AMI are a customization of an EC2 instance

- You add your own software, configuration, operating system, monitoring...

- Faster boot / configuration time because all your software is pre-packaged

- AMI are built for a specific region (and can be copied across regions)

- You can launch EC2 instances from:

- Public AMIs: AWS provided, free to use, generally you just select the operating system you want

- AWS Marketplace AMIs: an AMI someone else made (and potentially sells) - generally come packaged with additional, licensed software

- Your own AMI: you make and maintain them yourself

- AMI Process(from an EC2 instance)

- Start an EC2 instance and customize it

- Stop the instance (for data integrity)

- Build an AMI – this will also create EBS snapshots

- Launch instances from other AMIs

- An AMI includes the following:

- One or more EBS snapshots OR for instance-store-backed AMIs - a template for the root volume of the instance

- Launch permissions that control which AWS accounts can use the AMI to launch instances

- A block device mapping that specifies the volumes to attach to the instance when it's launched

Tip

It's not possible to move an existing instance to another subnet, Availability Zone, or VPC.

Instead, you can manually migrate the instance by creating a new Amazon Machine Image (AMI) from the source instance.

Then, launch a new instance using the new AMI in the desired subnet, Availability Zone, or VPC.

Finally, you can reassign any Elastic IP addresses from the source instance to the new instance.

EC2 Image Builder

- Used to automate the creation of Virtual Machines or container images

- Automate the creation, maintain, validate and test EC2 AMIs

- Can be run on a schedule (weekly, whenever packages are updated, etc...)

- Free service (only pay for the underlying resources)

EC2 Instance Store

high-performance hardware disk

- Better I/O performance

- EC2 Instance Store lose their storage if they’re stopped (ephemeral)

- Good for buffer / cache / scratch data / temporary content

- Risk of data loss if hardware fails

- Backups and Replication are your responsibility

- Instance Store volumes are physically attached to the host

Placement Groups

Control over the EC2 Instance placement strategy

Cluster

clusters instances into a low-latency group in a single Availability Zone

- Pros: Great network (10 Gbps bandwidth between instances with Enhanced Networking enabled - recommended)

- Cons: If the rack fails, all instances fails at the same time

- Use case:

- Big Data job that needs to complete fast

- Application that needs extremely low latency and high network throughput

Spread

spreads instances across underlying hardware (max 7 instances per group per AZ)

- Pros:

- Can span across AvailabilityZones (AZ)

- Reduced risk is simultaneous failure

- EC2 Instances are on different physical hardware

- Cons:

- Limited to 7 instances per AZ per placement group

- Use case:

- Application that needs to maximize high availability

- Critical Applications where each instance must be isolated from failure from each other

Partition

spreads instances across many different partitions (which rely on different sets of racks) within an AZ. Scales to 100s of EC2 instances per group (Hadoop, Cassandra, Kafka)

- Up to 7 partitions per AZ

- Can span across multiple AZs

- Up to 100s of EC2 instances

- The instances in a partition do not share racks with the instances in the other partitions

- A partition failure can affect many EC2 but won’t affect other partitions

- EC2 instances get access to the partition information as metadata

- Use cases: HDFS, HBase, Cassandra, Kafka

Rules and Limitations

- Placement groups can't cross regions

- An instance can be launched in one placement group at a time; it cannot span multiple placement groups

- You can't merge placement groups

- A cluster placement group can't span multiple Availability Zones

- A partition placement group supports a maximum of 7 partitions per Availability Zone

- A rack spread placement group supports a maximum of 7 running instances per Availability Zone

Network Interfaces (ENI, ENA, EFA)

ENI - Elastic Network Interface

Logical component in a VPC that represents a virtual network card

- The ENI can have the following attributes:

- Primary private IPv4, one or more secondary IPv4

- One Elastic IP (IPv4) per private IPv4

- One Public IPv4(optional)

- One or more security groups

- A MAC address

- You can create ENI independently and attach them on the fly (move them) on EC2 instances for failover

- Additional ENIs can be attached from subnets within the same AZ

- The primary network interface has a private IP and optionally a public IP

- You cannot attach ENIs from subnets in different AZs

- Bound to a specific availability zone (AZ)

- Can use with all instance types

ENA - Elastic Network Adapter

- Enhanced networking performance

- Higher bandwidth and lower inter-instance latency

- Must choose supported instance type

EFA - Elastic Fabric Adapter

- Use with High Performance Computing and MPI and ML use cases

- Tightly coupled applications

- Can use with all instance types

Public, Private and Elastic IP addresses

- Public IP Address:

- Lost when the instance is stopped

- Used in Public Subnets

- No charge

- Associated with a private IP address on the instance

- Cannot be moved between instances

- Private IP Address:

- Retained when the instance is stopped

- Used in Public and Private Subnets

- Elastic IP Address:

- Static Public IP address

- You are charged if not used

- Associated with a private IP address on the instance

- Can be moved between instances and Elastic Network Adapters

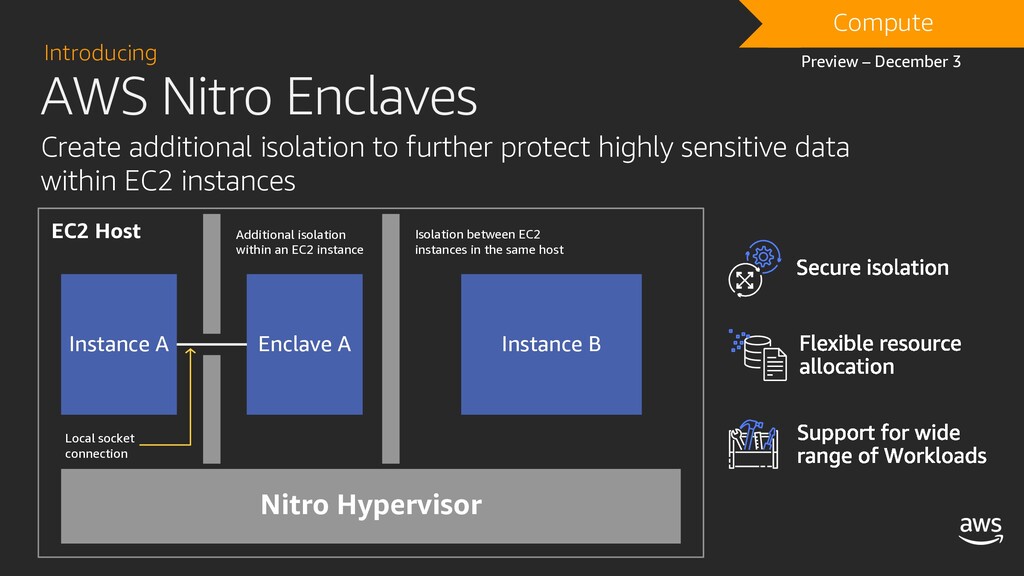

AWS Nitro System

Nitro is the underlying platform for the next generation of EC2 instances

- Support for many virtualized and bare metal instance types

- Breaks functions into specialized hardware with a Nitro Hypervisor

- Specialized hardware includes:

- Nitro cards for VPC

- Nitro cards for EBS

- Nitro for Instance Storage

- Nitro card controller

- Nitro security chip

- Nitro hypervisor

- Nitro Enclaves

- Improves performance, security and innovation:

- Performance close to bare metal for virtualized instances

- Elastic Network Adapter and Elastic Fabric Adapter

- More bare metal instance types

- Higher network performance (e.g. 100 Gbps)

- High Performance Computing (HPC) optimizations

- Dense storage instances (e.g. 60 TB)

AWS Nitro Enclaves

- Isolated compute environments

- Runs on isolated and hardened virtual machines

- No persistent storage, interactive access, or external networking

- Uses cryptographic attestation to ensure only authorized code is running

- Integrates with AWS Key Management Service (KMS)

- Protect and securely process highly sensitive data:

- Personally identifiable information (PII)

- Healthcare data

- Financial data

- Intellectual Property data

EBS

An EBS (Elastic Block Store) Volume is a network drive you can attach to your instances while they run

- It’s a network drive (i.e. not a physical drive)

- It uses the network to communicate the instance, which means there might be a bit of latency

- It can be detached from an EC2 instance and attached to another one quickly

- It’s locked to an Availability Zone (AZ)

- To move a volume across, you first need to snapshot it

- Have a provisioned capacity (size in GBs, and IOPS)

- You get billed for all the provisioned capacity

- You can increase the capacity of the drive over time

- Delete on Termination attribute:

- Controls the EBS behaviour when an EC2 instance terminates

- By default, the root EBS volume is deleted (attribute enabled)

- By default, any other attached EBS volume is not deleted (attribute disabled)

- This can be controlled by the AWS console / AWS CLI

Resizing EBS Volumes

After you increase the size of an EBS volume, use the Windows Disk Management utility or PowerShell to extend the disk size to the new size of the volume.

You can begin resizing the file system as soon as the volume enters the optimizing state.

EBS Snapshots

- Make a backup (snapshot) of your EBS volume at a point in time

- Not necessary to detach volume to do snapshot, but recommended

- Can copy snapshots across AZ or Region

- EBS Snapshot Archive:

- Move a snapshot to an "archive tier" which is cheaper

- Takes within 24 to 72 hours for restoring the archive

- EBS Snapshots Features:

- EBS Snapshot Archive

- Move a Snapshot to an 'archive tier' that is much cheaper

- Takes within 24 to 72 hours for restoring the archive

- Recycle Bin for EBS Snapshots

- Setup rules to retain deleted snapshots so you can recover them after an accidental deletion

- Specify retention(from 1 day to 1 year)

- Fast Snapshot Restore(FSR)

- Force full initialization of snapshot to have no latency on the first use($$$)

- Fast Snapshot restore (FSR) needs to be enabled per Availability Zone

- This can be enabled on both existing as well as new Snapshots

- EBS Snapshot Archive

Tip

Amazon EBS direct APIs can be used to create EBS snapshots, write data directly to snapshots, read data from snapshots, and identify the difference between two snapshots.

Note

There can be an initial performance hit when an Amazon EBS volume is created from snapshots. This can be avoided by either of the following:

- Force the immediate initialization of the entire volume.

- Enable fast snapshot to restore on a snapshot to ensure that the EBS volumes are fully initialized.

EBS Volume Types

- EBS Volumes come in 6 types

- gp2 / gp3 (SSD): General purpose SSD volume that balances price and performance for a wide variety of workloads

- io1 / io2 (SSD): Highest-performance SSD volume for mission-critical low-latency or high-throughput workloads

- st1 (HDD): Low cost HDD volume designed for frequently accessed, throughput-intensive workloads

- sc1 (HDD): Lowest cost HDD volume designed for less frequently accessed workloads

- EBS Volumes are characterized in Size | Throughput | IOPS (I/O Ops Per Sec)

- Only gp2/gp3 and io1/io2 can be used as boot volumes

EBS vs Instance Store

- EBS volumes are attached over the network, while Instance Store volumes are physically attached to the host and offer high - performance

- EBS volumes offer persistent storage, while Instance Store volumes are ephemeral(non-persistent)

EBS Multi-Attach – io1/io2 family

- Attach the same EBS volume to multiple EC2 instances in the same AZ

- Available for Nitro system-based EC2 instances

- Up to 16 instances can be attached to a single volume

- Each instance has full read & write permissions to the volume

- Use case:

- Achieve higher application availability in clustered Linux applications

- Applications must manage concurrent write operations

- Must use a file system that’s cluster-aware (not XFS, EX4, etc...)

Copying and Sharing AMIs and Snapshots

- Encrypted Snapshot -> Encrypted AMI

- Can be shared with other accounts(custom key only)

- Cannot be shared publicly

- Encrypted AMI -> Encrypted AMI

- Can change encryption key

- Can change region

- Encrypted AMI -> EC2 Instance

- Can change encryption key

- Can change AZ

- Unencrypted AMI -> EC2 Instance

- Can change encryption state

- Can change AZ

- Encrypted Snapshot -> Encrypted Volume

- Can be encrypted

- Can change AZ

Amazon Data Lifecycle Manager(DLM)

- DLM automates the creation, retention, and deletion of EBS snapshots and EBS-backed AMIs

- DLM helps with the following:

- Protects valuable data by enforcing a regular backup schedule

- Create standardized AMIs that can be refreshed at regular intervals

- Retain backups as required by auditors or internal compliance

- Reduce storage costs by deleting outdated backups

- Create disaster recovery backup policies that back up data to isolated accounts

EBS Encryption

- When you create an encrypted EBS volume, you get the following:

- Data at rest is encrypted inside the volume

- All the data in flight moving between the instance and the volume is encrypted

- All snapshots are encrypted

- All volumes created from the snapshot

- Encryption and decryption are handled transparently (you have nothing to do)

- Encryption has a minimal impact on latency

- EBS Encryption leverages keys from KMS (AES-256)

- Copying an unencrypted snapshot allows encryption

- Snapshots of encrypted volumes are encrypted

Using RAID with EBS

RAID stands for Redundant Array of Independent disks

- Not provided by AWS, you must configure through your operating system

- RAID 0 and RAID 1 are potential options on EBS

- RAID 0 is used for striping data across disks (performance)

- RAID 1 is used for mirroring data across disks (redundancy / fault tolerance)

- RAID 5 and RAID 6 are not recommended by AWS

EFS

EFS: Elastic File System

- Managed NFS (network file system) that can be simultaneously mounted on thousands of EC2

- Appliances are known as Network Attached Storage (NAS) devices for both SMB / CIFS and NFS

- Can connect instances from other VPCs and on-premises computers

- EFS works with Linux EC2 instances in multi-AZ - POSIX file system that has a standard file API

- Encryption at rest using KMS can be enabled when creating the file system

- Encryption during transit can be enabled when mounting the file system using the

Amazon EFS mount helper. The mount helper uses TLS version 1.2 to communicate with the file system. - Highly available, scalable, expensive (3x gp2), pay per use, no capacity planning

- EFS Infrequent Access (EFS-IA): Storage class that is cost-optimized for files not accessed every day

- Use cases:

- content management

- web serving

- data sharing

- Wordpress

Tip

Once you create an EFS file system, you cannot change its encryption setting.

This means that you cannot modify an unencrypted file system to make it encrypted. Instead, you need to create a new, encrypted file system.

Tip

Mount command: mount –t nfs servername:folderpath /mountpoint

EFS Performance

- EFS Scale

- 1000s of concurrent NFS clients, 10 GB+/s throughput

- Grow to Petabyte-scale network file system, automatically

- Performance mode (set at EFS creation time)

- General purpose(default): latency-sensitive use cases(webserver, CMS, etc...)

- Max I/O – higher latency, throughput, highly parallel (big data, media processing, etc...)

- Throughput mode

- Bursting (1TB = 50MiB/s + burst of up to 100MiB/s)

- Provisioned:set your throughput regardless of storage size,ex: 1GiB/s for 1TB storage

EFS Storage Classes

- Storage Tiers (lifecycle management feature – move file after N days)

- Standard: for frequently accessed files

- Infrequent access (EFS-IA): cost to retrieve files, lower price to store. Enable EFS-IA with a Lifecycle Policy

- Availability and durability

- Standard: Multi-AZ, great for prod

- One Zone: One-AZ, great for dev, backup enabled by default, compatible with IA (EFS One Zone-IA)

Tip

Amazon EFS Standard-IA storage class can be used to store data that is infrequently accessed but requires high availability and durability.

With Amazon EFS Standard-IA storage class, data is stored redundantly across multiple AZ.

Mount Targets

- A mount target provides an IP address for an NFSv4 endpoint at which you can mount an Amazon EFS file system.

- You mount your file system using its Domain Name Service (DNS) name, which resolves to the IP address of the EFS mount target in the same Availability Zone as your EC2 instance.

- You can create one mount target in each Availability Zone in an AWS Region

- If there are multiple subnets in an Availability Zone in your VPC, you create a mount target in one of the subnets. Then all EC2 instances in that Availability Zone share that mount target.

Amazon FSx

- Launch fully managed 3rd party high-performance file systems on AWS

- Fully managed service

- Amazon FSx for Windows File Server: A fully managed, highly reliable, and scalable native Windows shared file system

- Built on Windows File Server

- Full supports SMB protocol & Windows NTFS

- Integrated with Microsoft Active Directory

- Can be accessed from AWS or your on-premise infrastructure

- Supports Windows-native file system features:

- Access Control Lists (ACLs), shadow copies, and user quotas

- NTFS file systems that can be accessed from up to thousands of compute instances using the SMB protocol

- High availability: replicates data within an Availability Zone (AZ)

- Multi-AZ: file systems include an active and standby file server in separate AZs

- Amazon FSx for Lustre: A fully managed, high-performance, scalable file storage for High Performance Computing (HPC)

- High-performance file system optimized for fast processing of workloads such as:

- Machine learning

- High performance computing (HPC)

- Video processing

- Financial modeling

- Electronic design automation (EDA)

- Works natively with S3, letting you transparently access your S3 objects as files

- Your S3 objects are presented as files in your file system, and you can write your results back to S3

- Provides a POSIX-compliant file system interface

- High-performance file system optimized for fast processing of workloads such as:

Tip

The name Lustre is derived from "Linux" and "cluster"

Note

With Multi-AZ deployment, active and standby servers are placed in separate AZ. Data written to an active server is synchronously replicated to standby servers.

With Synchronous replication, data is written to active and standby servers simultaneously, while with asynchronous replication, there might be a lag between data written to active servers and standby servers.

Synchronous Replication is advantageous during failover where standby servers are in sync with active servers.

ELB & ASG

ELB: Elastic Load Balancing

ASG: Auto Scaling Group

High Availability & Scalability

- Vertical Scaling: Increase instance size (scale up / down)

- Horizontal Scaling: Increase number of instances (scale out / in)

- High Availability:

- Usually goes hand in hand with horizontal scaling

- Run instances for the same application across multi AZ

- Auto Scaling Group multi AZ

- Load Balancer multi AZ

- The goal of high availability is to survive a data center loss

- The high availability can be passive(exp. RDS Multi AZ)

- The high availability can be active(for horizontal scaling)

Scalability vs Elasticity vs Agility

Scalability: ability to accommodate a larger load by making the hardware stronger (scale up), or by adding nodes (scale out)Elasticity: once a system is scalable, elasticity means that there will be some "auto-scaling" so that the system can scale based on the load.This is "cloud-friendly": pay-per-use, match demand, optimize costsAgility: new IT resources are only a click away, which means that you reduce the time to make those resources available to your developers from weeks to just minutes.

Elastic Load Balancer

- An ELB (Elastic Load Balancer) is a managed load balancer

- AWS guarantees that it will be working

- AWS takes care of upgrades, maintenance, high availability

- AWS provides only a few configuration knobs

- It costs less to setup your own load balancer but it will be a lot more effort on your end (maintenance, integrations)

Application Load Balancer

- Operates at the request level

- Layer 7(HTTP / HTTPS / gRPC) only

- Load balancing to multiple applications across machines (target groups)

- Load balancing to multiple applications on the same machine (ex: containers)

- Support for HTTP/2 and WebSocket

- Support redirects (from HTTP to HTTPS for example)

- Routing tables to different target groups:

- Routing based on path in URL (example.com/users & example.com/posts)

- Routing based on hostname in URL (one.example.com & other.example.com)

- Routing based on Query String, Headers (example.com/users ?id=123&order=false)

- Routing based on source IP

- a great fit for micro services & container-based application (example: Docker & Amazon ECS)

- Has a port mapping feature to redirect to a dynamic port in ECS

- Target Groups:

- EC2 instances (can be managed by an Auto Scaling Group) – HTTP

- ECS tasks (managed by ECS itself) – HTTP

- Lambda functions – HTTP request is translated into a JSON event

- IP Addresses – must be private IPs

- Fixed hostname (XXX.<region>.elb.amazonaws.com)

- The application servers don’t see the IP of the client directly

- The true IP of the client is inserted in the header

X-Forwarded-For - We can also get Port (

X-Forwarded-Port) and Proto (X-Forwarded-Proto)

- The true IP of the client is inserted in the header

- Use Cases:

- Web applications with L7 routing (HTTP/HTTPS)

- Microservices architectures (e.g. Docker containers)

- Lambda targets

Network Load Balancer

- Operates at the connection level

- Ultra-high performance, allows for TCP(Layer 4):

- Forward TCP & UDP traffic to your instances

- Handle millions of request per seconds

- Less latency(~100 ms)

- Requests are routed based on IP protocol data

- NLB has one static IP per AZ, and supports assigning Elastic IP (helpful for whitelisting specific IP)

- NLB nodes can have elastic IPs in each subnet

- NLB are used for extreme performance, TCP or UDP traffic

- NLBs listen on TCP, TLS, UDP or TCP_UDP

- A separate listener on a unique port is required for routing

- Not included in the AWS free tier

- Health Checks support the TCP, HTTP and HTTPS Protocols

- Target Groups

- EC2 instances

- IP Addresses – must be private IPs

- Application Load Balancer

- On-Premises

- Use Cases:

- TCP and UDP based applications

- Ultra-low latency

- Static IP addresses

- VPC endpoint services

Gateway Load Balancer

- Used in front of virtual appliances

- Example: Firewalls, Intrusion Detection and Prevention Systems, Deep Packet Inspection Systems, payload manipulation, ...

- Operates at Layer 3 (Network Layer) – listens for all packets on all ports

- Forwards traffic to the TG specified in the listener rules

- Exchanges traffic with appliances using the GENEVE protocol on port 6081

- Deploy, scale, and manage a fleet of 3rd party network virtual appliances in AWS

- Combines the following functions:

- Transparent Network Gateway – single entry/exit for all traffic

- Load Balancer – distributes traffic to your virtual appliances

- Target Groups

- EC2 instances

- IP Addresses – must be private IPs

- Use Case:

- Load balance virtual appliances such as:

- Intrusion detection systems (IDS)

- Intrusion prevention systems (IPS)

- Next generation firewalls (NGFW)

- Web application firewalls (WAF)

- Distributed denial of service protection systems (DDoS)

- Integrate with Auto Scaling groups for elasticity

- Apply network monitoring and logging for analytics

- Load balance virtual appliances such as:

Load Balancer - SSL Certificates

Note

- An SSL Certificate allows traffic between your clients and your load balancer to be encrypted in transit (in-flight encryption)

- SSL refers to Secure Sockets Layer, used to encrypt connections

- TLS refers to Transport Layer Security, which is a newer version

- Nowadays, TLS certificates are mainly used, but people still refer as SSL

- Public SSL certificates are issued by Certificate Authorities (CA)

- Comodo, Symantec, GoDaddy, GlobalSign, Digicert, Letsencrypt, etc...

- SSL certificates have an expiration date (you set) and must be renewed

- The load balancer uses an X.509 certificate (SSL/TLS server certificate)

- You can manage certificates using

ACM(AWS Certificate Manager) - You can create upload your own certificates alternatively

- HTTPS listener:

- You must specify a default certificate

- You can add an optional list of certs to support multiple domains

- Clients can use SNI (Server Name Indication) to specify the hostname they reach

- Ability to specify a security policy to support older versions of SSL /TLS (legacy clients)

SSL – Server Name Indication (SNI)

SNI solves the problem of loading multiple SSL certificates onto one web server (to serve multiple websites)

- It’s a "newer" protocol, and requires the client to indicate the hostname of the target server in the initial SSL handshake

- The server will then find the correct certificate, or return the default one

- Note:

- Only works for ALB & NLB (newer generation), CloudFront

- Does not work for CLB (older gen)

Cross-Zone Load Balancing

- Application Load Balancer

- Always on (can’t be disabled)

- No charges for inter-AZ data

- Network Load Balancer

- Disabled by default

- You pay charges ($) for inter-AZ data if enabled

Connection Draining

Time to complete "in-flight requests" while the instance is de-registering or unhealthy

- Stops sending new requests to the EC2 instance which is de-registering

- Between 1 to 3600 seconds (default: 300 seconds)

- Can be disabled (set value to 0)

- Set to a low value if your requests are short

ELB Access Logs

ELB access logs are an optional feature that can be used to troubleshoot traffic patterns and issues with traffic as it hits the ELB.

- ELB access logs capture details of requests sent to your load balancer such as:

- The time of the request

- The client IP

- Latency

- Server responses

- Access logs are stored in an S3 bucket. Log files are published every five minutes, and multiple logs can be published for the same five-minute period.

- ELB access logs also include HTTP response codes from the target.

- Disabled by default

Note

- The S3 bucket must be in the same region as the ELB.

- The bucket policy must be configured to allow access logs to write to the bucket.

- You can use tools such as Amazon Athena, Loggly, Splunk, or Sumo Logic to analyze the contents of ELB access logs.

Auto Scaling Group

- In real-life, the load on your websites and application can change

- In the cloud, you can create and get rid of servers very quickly

- The goal of an Auto Scaling Group (ASG) is to:

- Scale out (add EC2 instances) to match an increased load

- Scale in (remove EC2 instances) to match a decreased load

- Ensure we have a minimum and a maximum number of machines running

- Automatically register new instances to a load balancer

- Replace unhealthy instances

- Cost Savings: only run at an optimal capacity (principle of the cloud)

Note

Automatically scale AWS services including:

- Amazon EC2 – Launch or terminate EC2 instances

- Amazon EC2 Spot Fleets

- Amazon ECS – Adjust ECS service desired count up/down

- Amazon DynamoDB – increase provisioned RCUs/WCUs

- Amazon Aurora – Adjust the number of Read Replicas

Tip

ASG is region bound, you can’t span them across regions

Configuration of an Auto Scaling Group

- A Launch Template specifies the EC2 instance configuration:

- AMI + InstanceType

- EC2 User Data

- EBS Volumes

- Security Groups

- SSH Key Pair

- IAM Roles for your EC2 Instances

- Network + Subnets Information

- Load Balancer Information

- Configure purchase options – On-demand vs Spot

- Configure VPC and Subnets

- Attach Load Balancer

- Configure health checks for EC2 & ELB

- Group size and scaling policies

Monitoring

- It is possible to scale an ASG based on CloudWatch alarms

- An alarm monitors a metric (such as Average CPU, or a custom metric)

- Metrics such as Average CPU are computed for the overall ASG instances

Note

- Group metrics (ASG)

- Data points about the Auto Scaling group

- 1-minute granularity

- No charge

- Must be enabled

- Basic monitoring (Instances)

- 5-minute granularity

- No Charge

- Detailed monitoring (Instances)

- 1-minute granularity

- Charges apply

Scaling Strategies

- Manual Scaling: Update the size of an ASG manually

- Dynamic Scaling: Respond to changing demand

- Target Tracking Scaling

- Most simple and easy to set-up

- Example: the average ASG CPU to stay at around 40%

- Simple / Step Scaling

- When a CloudWatch alarm is triggered (example CPU > 70%), then add 2 units

- When a CloudWatch alarm is triggered (example CPU < 30%), then remove 1 unit

- Scheduled Scaling

- Anticipate a scaling based on known usage patterns

- Example: increase the min capacity to 10 at 5 pm on Fridays

- Target Tracking Scaling

- Predictive Scaling: Uses Machine Learning to predict future traffic ahead of time

Tip

Target tracking is only for unpredictable workloads

Additional Scaling Settings

- Cooldowns – Used with simple scaling policy to prevent Auto Scaling from launching or terminating before effects of previous activities are visible. Default value is 300 seconds (5 minutes)

- Termination Policy – Controls which instances to terminate first when a scale-in event occurs

- Termination Protection – Prevents Auto Scaling from terminating protected instances

- Standby State – Used to put an instance in the InService state into the Standby state, update or troubleshoot the instance

- Lifecycle Hooks – Used to perform custom actions by pausing instances as the ASG launches or terminates them

Global Applications

Route 53

DNS Terminologies

- Domain Registrar: Amazon Route 53, GoDaddy, ...

- DNS Records: A, AAAA, CNAME, NS, ...

- Zone File: contains DNS records

- Name Server: resolves DNS queries (Authoritative or Non-Authoritative)

- Top Level Domain (TLD): .com, .us, .in, .gov, .org, ...

- Second Level Domain (SLD): amazon.com, google.com, ...

Amazon Route 53

- A highly available, scalable, fully managed and Authoritative DNS

- Authoritative = the customer (you) can update the DNS records

- Route 53 is also a Domain Registrar

- Ability to check the health of your resources

- The only AWS service which provides 100% availability SLA

- 53 is a reference to the traditional DNS port

- Features:

- Domain Registration

- Hosted Zone

- Health Checks

- Traffic Flow

Route 53 – Records

- How you want to route traffic for a domain

- Each record contains:

- Domain/subdomain Name

- Record Type

- Value

- Routing Policy – how Route 53 responds to queries

- TTL – amount of time the record cached at DNS Resolvers

- Route 53 supports the following DNS record types:

- (must know)A /AAAA / CNAME / NS

A– maps a hostname to IPv4AAAA– maps a hostname to IPv6CNAME– maps a hostname to another hostname- The target is a domain name which must have an A or AAAA record

- Can’t create a CNAME record for the top node of a DNS namespace(Zone Apex) -> use

Aliasinstead - Example: you can’t create for example.com, but you can create for <www.example.com>

NS– Name Servers for the Hosted Zone- Control how traffic is routed for a domain

- (advanced)CAA/DS/MX/NAPTR/PTR/SOA/TXT/SPF/SRV

- (must know)A /AAAA / CNAME / NS

Route 53 – Hosted Zones

- A container for records that define how to route traffic to a domain and its subdomains

- Public Hosted Zones

- contains records that specify how to route traffic on the Internet (public domain names)

- Exp. application1.

mypublicdomain.com

- Private Hosted Zones

- contain records that specify how you route traffic within one or more VPCs (private domain names)

- Exp. application1.

company.internal

- You pay $0.50 per month per hosted zone

Note

When you create a hosted zone, Route 53 automatically creates a name server (NS) record and a start of authority (SOA) record for the zone.

Migration to/from Route 53

- You can migrate from another DNS provider and can import records

- You can migrate a hosted zone to another AWS account

- You can migrate from Route 53 to another registrar

- You can also associate a Route 53 hosted zone with a VPC in another account

- Authorize association with VPC in the second account

- Create an association in the second account

Records TTL(Time To Live)

- High TTL – e.g., 24 hr

- Less traffic on Route 53

- Possibly outdated records

- Low TTL – e.g., 60 sec.

- More traffic on Route 53 ($$)

- Records are outdated for less time

- Easy to change records

Note

Except for Alias records,TTL is mandatory for each DNS record

CNAME vs Alias

- Points a hostname to any other hostname (acme.example.com to zenith.example.com or to acme.example.org)

- Can redirect DNS queries to any DNS record

- ONLY FOR NON ROOT DOMAIN (aka. something.mydomain.com)

- Points a hostname to an AWS Resource (app.mydomain.com => blabla.amazonaws.com)

- Works for ROOT DOMAIN and NON ROOT DOMAIN (aka mydomain.com)

- An extension to DNS functionality

- Automatically recognizes changes in the resource's IP addresses

- Alias Record is always of type A/AAAA for AWS resources

- You can't set the TTL

- Free of charge

- Native health check

- Records Targets:

- Elastic Load Balancers

- CloudFront Distributions

- API Gateway

- Elastic Beanstalk environments

- S3 Websites

- VPC Interface Endpoints

- Global Accelerator accelerator

- Route 53 record in the same hosted zone

- You can't set an ALIAS record for an EC2 DNS name

Route 53 – Routing Policies

Note

- Define how Route 53 responds to DNS queries

- Routing:

- It’s not the same as Load balancer routing which routes the traffic

- DNS does not route any traffic, it only responds to the DNS queries

Route 53 Supports the following Routing Policies:

- Simple DNS response providing the IP address associated with a name

- Can specify multiple values in the same record

- If multiple values are returned, a random one is chosen by the client

- When Alias enabled, specify only one AWS resource

- Can’t be associated with Health Checks

- Uses the relative weights(actually use an integer between 0 and 255) assigned to resources to determine which to route to

- Assign each record a relative weight(weights don't need to sum up to 100)

- DNS records must have the same name and type

- Can be associated with Health Checks

- Use cases: load balancing between regions, testing new application versions...

- Assign a weight of 0 to a record to stop sending traffic to a resource

- If all records have weight of 0, then all records will be returned equally

- If primary is down (based on health checks), routes to secondary destination

- Health check is required on Primary

- Redirect to the resource that has the least latency close to us

- Super helpful when latency for users is a priority

- Latency is based on traffic between users and AWS Regions

- Japan users may be directed to the US (if that’s the lowest latency)

- Can be associated with Health Checks (has a failover capability)

- Different from Latency-based!

- This routing is based on user geographic location

- Specify location by Continent, Country or by US State (if there’s overlapping, most precise location selected)

- Should create a "Default" record (in case there’s no match on location)

- Use cases: website localization, restrict content distribution, load balancing, ...

- Can be associated with Health Checks

- You can use geolocation routing to create records in a private hosted zone

- Use when routing traffic to multiple resources

- Returns several IP addresses and functions as a basic load balancer

- Can be associated with Health Checks (return only values for healthy resources)

- Up to 8 healthy records are returned for each Multi-Value query

- Multi-Value is not a substitute for having an ELB

- Using Route 53 Traffic Flow feature

- Route traffic to your resources based on the geographic location of users and resources

- Ability to shift more traffic to resources based on the defined bias

- You must use Route 53 Traffic Flow to use this feature

Route 53 – Calculated Health Checks

- Combine the results of multiple Health Checks into a single Health Check

- You can use OR, AND, or NOT

- Can monitor up to 256 Child Health Checks

- Specify how many of the health checks need to pass to make the parent pass

- Usage: perform maintenance to your website without causing all health checks to fail

AWS CloudFront

Content Delivery Network (CDN):

- Improves read performance, content is cached at the edge

- Improves users experience

- 450+ Point of Presence globally (edge locations)

- DDoS protection, integration with Shield, AWS Web Application Firewall

CloudFront Origin Access Control (OAC)

- Like a Signed OAI but supports additional use cases

- AWS recommend the OAC for the following use cases:

- Amazon S3 server-side encryption with AWS KMS (SSE-KMS)

- All Amazon S3 buckets in all AWS Regions

- Dynamic requests (PUT and DELETE) to Amazon S3

- Requires an S3 bucket policy that allows the CloudFront service principal

Origins

- For distributing files and caching them at the edge

- Enhanced security with CloudFront Origin Access Control (OAC)

- OAC is replacing Origin Access Identity(OAI)

- CloudFront can be used as an ingress (to upload files to S3)

- Application Load Balancer

- EC2 instance

- S3 website (must first enable the bucket as a static S3 website)

- Any HTTP backend you want

Tip

The uses cases for origin custom headers are:

- Identifying requests from CloudFront

- Determining which requests come from a particular distribution

- Enabling cross-origin resource sharing (CORS)

- Controlling access to content

If the header names and values that you specify are not already present in the viewer request, CloudFront adds them to the origin request.

If a header is present, CloudFront overwrites the header value before forwarding the request to the origin.

Geo Restriction

- You can restrict who can access your distribution

- Whitelist: Allow your users to access your content only if they're in one of the countries on a list of approved countries.

- Blacklist: Prevent your users from accessing your content if they're in one of the countries on a blacklist of banned countries.

- The "country" is determined using a 3rd party Geo-IP database

- Use case: Copyright Laws to control access to content

CloudFront vs S3 Cross Region Replication

- Global Edge network

- Files are cached for a TTL (maybe a day)

- Great for static content that must be available everywhere

- Must be setup for each region you want replication to happen

- Files are updated in near real-time

- Read only

- Great for dynamic content that needs to be available at low-latency in few regions

Price Classes

- You can reduce the number of edge locations for cost reduction

- Three price classes:

- Price Class All: all regions – best performance

- Price Class 200: most regions, but excludes the most expensive regions

- Price Class 100: only the least expensive regions

CloudFront Caching

- Cache based on

- Headers

- Session Cookies

- Query String Parameters

- The cache lives at each CloudFront Edge Location

- You want to maximize the cache hit rate to minimize requests on the origin

- Control the TTL (0 seconds to 1 year), can be set by the origin using the Cache-Control header, Expires header...

- You can invalidate part of the cache using the CreateInvalidation API

Tip

- You can define a maximum TTL and a default TTL

- TTL is defined at the behavior level -> This can be used to define different TTLs for different file types

- After expiration, CloudFront checks the origin for any new requests (check if the file is the latest version)

- Headers can be used to control the cache:

Cache-Control max-age=(seconds)- specify how long before CloudFront gets the object again from the origin serverExpires– specify an expiration date and time

- When the TTL on a file expires, CloudFront forwards the next incoming request to the origin server. If CloudFront has the latest version, the origin returns the status code

304 Not Modified.

CloudFront Signed URL vs S3 Pre-Signed URL

- Allow access to a path, no matter the origin

- Account wide key-pair, only the root can manage it

- Can specify beginning and expiration date and time, IP addresses/ranges of users

- Can leverage caching features

- Issue a request as the person who pre-signed the URL

- Uses the IAM key of the signing IAM principal

- Limited lifetime

Note

Signed URLs should be used for individual files and clients that don’t support cookies

CloudFront Signed Cookies

- Similar to Signed URLs

- Use signed cookies when you don’t want to change URLs

- Can also be used when you want to provide access to multiple restricted files (Signed URLs are for individual files)

CloudFront Access Logs

- Contain detailed information about every user request that CloudFront receives at Edge Locations

- Known as standard logs and stored in S3

- Can log separately for different distributions

- Can also enable real-time logs which are recorded in real time (within seconds)

- Real-time logs can be used to monitor, analyze, and take action based on content delivery performance

- Edge function logs record requests processed by Lambda@Edge and CloudFront Functions

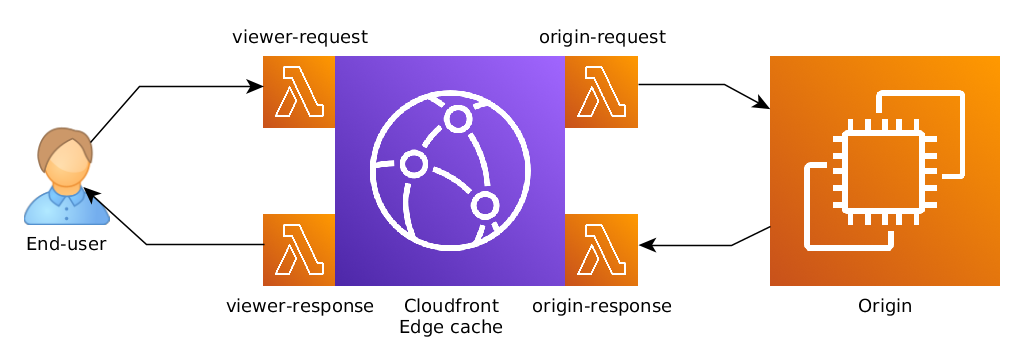

Lambda@Edge

- Lambda functions written in NodeJS or Python

- Scales to 1000s of requests/second

- Used to change CloudFront requests and responses:

- Viewer Request – after CloudFront receives a request from a viewer

- Origin Request – before CloudFront forwards the request to the origin

- Origin Response – after CloudFront receives the response from the origin

- Viewer Response – before CloudFront forwards the response to the viewer

- Use Cases:

- Website Security and Privacy

- Dynamic Web Application at the Edge

- Search Engine Optimization (SEO)

- Intelligently Route Across Origins and Data Centers

- Bot Mitigation at the Edge

- Real-time Image Transformation

- A/B Testing

- User Authentication and Authorization

- User Prioritization

- User Tracking and Analytics

S3 Transfer Acceleration

Increase transfer speed by transferring file to an AWS edge location which will forward the data to the S3 bucket in the target region

AWS Global Accelerator

- Improve global application availability and performance using the AWS global network

- Leverage the AWS internal network to optimize the route to your application (60% improvement)

- 2 Anycast IP are created for your application and traffic is sent through Edge Locations

- The Edge locations send the traffic to your application

Tip

- Unicast IP: one server holds one IP address

- Anycast IP: all servers hold the same IP address and the client is routed to the nearest one

- Works with Elastic IP, EC2 instances, ALB, NLB, public or private

- Consistent Performance

- Intelligent routing to lowest latency and fast regional failover

- No issue with client cache (because the IP doesn’t change)

- Internal AWS network

- Health Checks

- Global Accelerator performs a health check of your applications

- Helps make your application global (failover less than 1 minute for unhealthy)

- Great for disaster recovery (thanks to the health checks)

- Security

- only 2 external IP need to be whitelisted

- DDoS protection thanks to AWS Shield

AWS Global Accelerator vs CloudFront

- They both use the AWS global network and its edge locations around the world

- Both services integrate with AWS Shield for DDoS protection.

- Improves performance for your cacheable content (such as images and videos)

- Dynamic content(such as API acceleration and dynamic site delivery)

- Content is served at the edge

- Improves performance for a wide range of applications over TCP or UDP

- No caching, proxying packets at the edge to applications running in one or more AWS Regions

- Good fit for non-HTTP use cases, such as gaming(UDP), IoT(MQTT),or Voice over IP

- Good for HTTP use cases that require static IP addresses, deterministic, fast regional failover

AWS Outposts

- Hybrid Cloud: businesses that keep an on-premises infrastructure alongside a cloud infrastructure

- Therefore, two ways of dealing with IT systems:

- One for the AWS cloud (using the AWS console, CLI, and AWS APIs)

- One for their on-premises infrastructure

- AWS Outposts are "server racks" that offers the same AWS infrastructure, services, APIs & tools to build your own applications on-premises just as in the cloud

- AWS will setup and manage "Outposts Racks" within your on-premises infrastructure and you can start leveraging AWS services on-premises

- You are responsible for the Outposts Rack physical security

- Benefits:

- Low-latency access to on-premises systems

- Local data processing

- Data residency

- Easier migration from on-premises to the cloud

- Fully managed service

AWS WaveLength

WaveLength Zones are infrastructure deployments embedded within the telecommunications providers’ datacenters at the edge of the 5G networks

- Brings AWS services to the edge of the 5G networks (Example:EC2,EBS,VPC...)

- Ultra-low latency applications through 5G networks

- Traffic doesn’t leave the Communication Service Provider’s (CSP) network

- High-bandwidth and secure connection to the parent AWS Region

- No additional charges or service agreements

- Use cases:

- Smart Cities

- ML-assisted diagnostics

- Connected Vehicles

- Interactive Live Video Streams

- AR/VR

- Real-time Gaming

- ...

AWS Local Zones

- Places AWS compute, storage, database, and other selected AWS services closer to end users to run latency-sensitive applications

- Extend your VPC to more locations – "Extension of an AWS Region"

- Compatible with EC2, RDS, ECS, EBS, ElastiCache, Direct Connect ...

VPC

A VPC is a logically isolated, software-defined portion of the AWS cloud within a region

Note

- CIDR block size can be between /16 and /28

- The CIDR block must not overlap with any existing CIDR block that's associated with the VPC

- You cannot increase or decrease the size of an existing CIDR block, but you can add a secondary CIDR block to an existing VPC

Tip

CIDR stands for Classless Inter-Domain Routing

Subnet(IPv4)

- AWS reserves 5 IP addresses (first 4 & last 1) in each subnet

- These 5 IP addresses are not available for use and can’t be assigned to an EC2 instance

Example

if CIDR block 10.0.0.0/24, then reserved IP addresses are:

- 10.0.0.0 – Network Address

- 10.0.0.1 – reserved by AWS for the VPC router

- 10.0.0.2 – reserved by AWS for mapping to Amazon-provided DNS

- 10.0.0.3 – reserved by AWS for future use

- 10.0.0.255 – Network Broadcast Address. AWS does not support broadcast in a VPC, therefore the address is reserved

VPC & Subnets Primer

- VPC - Virtual Private Cloud: private network to deploy your resources (regional resource)

- Subnets allow you to partition your network inside your VPC (Availability Zone resource)

- A public subnet is a subnet that is accessible from the internet

- A private subnet is a subnet that is not accessible from the internet

- To define access to the internet and between subnets, we use Route Tables.

Internet Gateway

- Allows resources (e.g., EC2 instances) in a VPC connect to the Internet

- It scales horizontally and is highly available and redundant

- Must be created separately from a VPC

- One VPC can only be attached to one IGW and vice versa

Tip

Internet Gateways on their own do not allow Internet access... -> Route tables must also be edited! IPv6 only (egress) : Internet Gateway IPv4 only (ingress) : NAT Gateway

Bastion Hosts

- We can use a Bastion Host to SSH into our private EC2 instances

- The bastion is in the public subnet which is then connected to all other private subnets

- Bastion Host security group must allow inbound from the internet on port 22 from restricted CIDR

- Security Group of the EC2 Instances must allow the Security Group of the Bastion Host, or the private IP of the Bastion host

NAT Instance

NAT = Network Address Translation

- Allows EC2 instances in private subnets to connect to the Internet

- Must be launched in a public subnet

- Must disable EC2 setting:

Source/destinationCheck - Must have Elastic IP attached to it

- RouteTables must be configured to route traffic from private subnets to the NAT Instance

NAT Gateway

AWS-managed NAT, higher bandwidth, high availability, no administration

- Pay per hour for usage and bandwidth

- NATGW is created in a specific Availability Zone, uses an Elastic IP

- Can’t be used by EC2 instance in the same subnet (only from other subnets)

- Requires an IGW (Private Subnet => NATGW => IGW)

- 5 Gbps of bandwidth with automatic scaling up to 45 Gbps

- No Security Groups to manage / required

Tip

Ensure that your connection is using a TCP, UDP, or ICMP protocol only.

Network Access Control List(NACL)

NACL are like a firewall which control traffic from and to subnets

- One NACL per subnet, new subnets are assigned the Default NACL

- You define NACL Rules:

- Rules have a number (1-32766), higher precedence with a lower number

- First rule match will drive the decision

- The last rule is an asterisk (*) and denies a request in case of no rule match

- AWS recommends adding rules by increment of 100

- Newly created NACLs will deny everything

- NACL are a great way of blocking a specific IP address at the subnet level

Default NACL

- Accepts everything inbound/outbound with the subnets it’s associated with

- Do NOT modify the Default NACL, instead create custom NACLs

Ephemeral Ports

- For any two endpoints to establish a connection, they must use ports

- Clients connect to a defined port, and expect a response on an ephemeral port

- Different Operating Systems use different port ranges, examples:

- IANA & MS Windows10 -> 49152–65535

- Many Linux Kernel -> 32768–60999

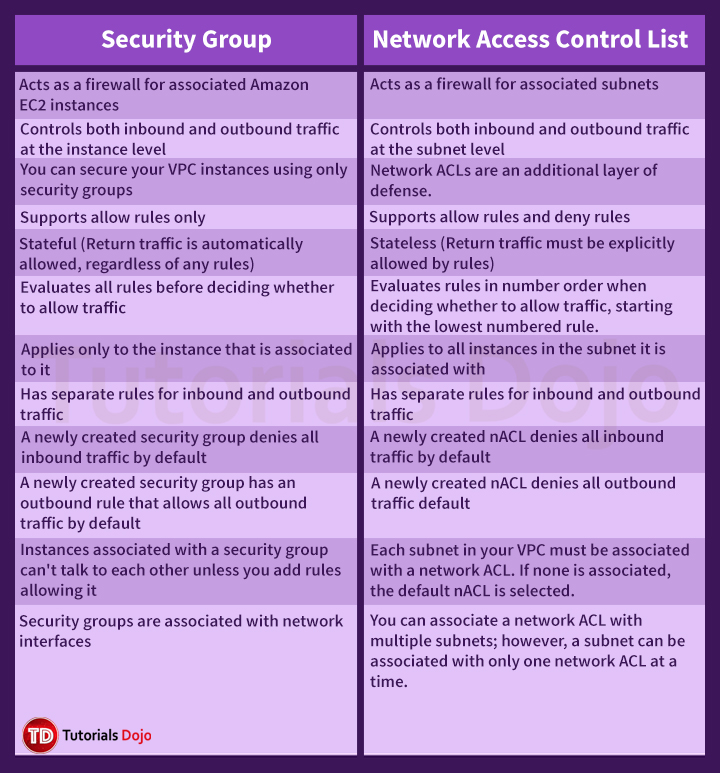

Network ACL VS Security Groups

- A firewall that controls traffic to and from an ENI(Elastic Network Interface) / an EC2 Instance

- Can have only ALLOW rules

- Is stateful: Return traffic is automatically allowed, regradless of any rules

- All rules evaluated before deciding whether to allow traffic

- Applies to an instance only if someone specifies the security when launching the instance or associate the security group with the instance later on

- Rules include IP addresses and other security groups

- Security Groups can be applied to instances in any subnet

- A firewall which controls traffic from and to subnet

- Can have ALLOW and DENY rules

- Is stateless: Return traffic must be explicitly allowed by rules

- Rules processed in number order when deciding whether to allow traffic

- Are attached at the Subnet level

- Rules only include IP addresses

Tip

- A stateful firewall allows the return traffic automatically

- A stateless firewall checks for an allow rule for both connections

VPC Flow Logs

Capture information about the IP traffic flowing in or out of network interfaces in a VPC:

- VPC Flow Logs

- Subnet Flow Logs

- Elastic Network Interface Flow Logs

- Helps to monitor & troubleshoot connectivity issues

- Example:

- Subnets to internet

- Subnets to subnets

- Internet to subnets

- Captures network information from AWS managed interfaces too: Elastic Load Balancers, ElastiCache, RDS, Aurora, etc...

- VPC Flow logs data can go to S3 bucket / CloudWatch Logs

- Flow logs can be created at the following levels:

- VPC

- Subnet

- Network interface

Tip

Flow logs do not provide the ability to view a real-time stream of traffic. Logs are published every 10 minutes by default but can be configured for faster delivery.

Warning

After you create a flow log, you CANNOT change its configuration or the flow log record format

VPC to VPC Connectivity Options

- VPC Peering

- AWS Transit Gateway

- Software S2S VPN

- Software VPN to AWS VPN

- AWS Managed VPN

- AWS PrivateLink

VPC Peering

Connect two VPC, privately using AWS’s network

- Make them behave as if they were in the same network

- Must not have overlapping CIDR (IP address range)

- VPC Peering connection is not transitive (must be established for each VPC that need to communicate with one another)

- You must update route tables in each VPC’s subnets to ensure EC2 instances can communicate with each other

- VPC Peering enables routing using private IPv4 or IPv6 addresses

Tip

- You can create VPC Peering connection between VPCs in different AWS accounts/regions

- You can reference a security group in a peeredVPC (works cross accounts – same region)

- You cannot reference the security group of a peer VPC that's in a different Region. Instead, use the CIDR block of the peer VPC

- BGP protocol uses TCP port 179 for establishing a peering connection. While establishing AWS VPN connectivity using BGP protocol, it needs to be checked that TCP port 179 is not blocked in the network

VPC Endpoints

VPC Endpoints (powered by AWS PrivateLink) allow resources inside a VPC to connect to other AWS Services outside the VPC using a private network instead of the public www network

- Gives you enhanced security and lower latency to access AWS services

- Redundant and scale horizontally

- Remove the need of IGW, NATGW, ... to access AWS Services

- VPC Endpoint Gateway: S3 & DynamoDB

- VPC Endpoint Interface: the rest

- Only used within your VPC

Types of Endpoints

- An ENI within an AWS VPC that has a private IP address within the VPC subnet of the resources that are consuming the service

- Provisions an ENI (private IP address) as an entry point (must attach a Security Group)

- Uses DNS entries to redirect traffic

- Supports most AWS services(API Gateway, CloudFormation, CloudWatch etc.)

- Security: Security Groups

- $ per hour + $ per GB of data processed

- Provisions a gateway and must be used as a target in a route table (does not use security groups)

- Uses prefix lists in the route table to redirect traffic

- Supports both S3 and DynamoDB

- Security: VPC Endpoint Policies

- Free

Site to Site VPN & Direct Connect

- AWS VPN is a managed IPSec VPN

- Connect an on-premises VPN to AWS

- The connection is automatically encrypted

- Supports static routes or BGP peering/routing

- Goes over the public internet

- A VGW(Virtual Private Gateway) is deployed on the AWS site

- A CGW(Customer Gateway) is deployed on the customer side

- DX is a physical fibre connection between on-premises and AWS running at 1Gbps or 10Gbps or 100Gbps

- A cross-connect between the AWS DX router and the customer/partner DX router

- A DX port (1000-Base-LX or 10GBASE-LR) must be allocated in a DX location

- The customer router is connected to the DX router in the DX location

- The connection is private, secure and fast

- Goes over a private network

- Takes at least a month to establish

- Speeds from 50Mbps to 500Mbps can also be accessed via an APN partner

- DX Connections are NOT encrypted!

- Use an

IPSec S2S VPNconnection over a VIF to add encryption in transit

Note

Site-to-site VPN and Direct Connect cannot access VPC endpoints

Note

- A VIF is a virtual interface (802.1Q VLAN) and a BGPsession

- A Private VIF connects to a single VPC in the same AWS Region using a VGW

- A Public VIF can be used to connect to AWS Public services in any Region (but not the Internet)

- Multiple Private VIFs can be used to connect to multiple VPCs in the Region

- VIFs can also be shared with other AWS accounts – known as hosted VIFs

AWS VPN CloudHub

- A VGW is deployed on the AWS site

- Network traffic may go between a VPC and a remote office

- Network traffic between offices can also be routed over the IPSec VPN

- Remote offices connect to the VGW in a hub-and-spoke mode

- Each office must use a unique BGP ASN

Transit Gateway

Transit Gateway is a network transit hub that interconnects VPCs and on-premises networks

- For having transitive peering between thousands of VPC and on-premises, hub-and-spoke (star) connection

- One single Gateway to provide this functionality

- TGWs can be attached to VPNs, Direct Connect Gateways, 3rd party appliances and TGWs in other Regions/accounts

Note

Connections supported by a transit gateway:

- VPN to a physical datacenter

- Direct Connect Gateway

- Transitive connections between multiple VPCs

Tip

- VPC Peering does not have an aggregate bandwidth limitation.

- Transit gateway connections to a VPC provide up to 50 Gbps of bandwidth.

- A VPN connection provides a maximum throughput of 1.25 Gbps.

IPv6 in VPC

- IPv4 cannot be disabled for your VPC and subnets

- All IPv6 addresses are publicly routable (no NAT)

- You can enable IPv6 (they’re public IP addresses) to operate in dual-stack mode

- Your EC2 instances will get at least a private internal IPv4 and a public IPv6

- They can communicate using either IPv4 or IPv6 to the internet through an Internet Gateway

Egress-only Internet Gateway